The future of customer research is about creating customers who understand us better.

Read that again, because that’s the seductive promise of AI-powered digital twins and synthetic users: technologies that can predict human behavior with up to 85% accuracy while never once disagreeing with your research methodology or storming out of a focus group.

But here's what the researchers buried in footnote 47: your perfect AI customers are so well-behaved, they're practically useless.

Three recent studies have rigorously tested these digital doppelgängers, uncovering an intriguing paradox. We can now build AI models that replicate individual survey responses with remarkable precision, predict population-level trends with near-perfect correlation, and even backfill missing data from surveys conducted years ago. Yet in our rush to solve the messy, expensive problem of human research, we might be creating an even bigger problem: customers who are too polite to tell us the truth.

A 2024 study by researchers Kim and Lee (1) created AI models trained on the General Social Survey—a dataset spanning 50 years and 69,000 respondents—and achieved 78% accuracy when predicting how specific individuals would answer questions they'd skipped or never been asked.

Participants could answer just 60% of your questions, and AI could reliably fill in the gaps. Historical data gaps? Solved. Want to know how your 2019 survey respondents would have answered a question you only thought to ask in 2024? Your digital twin has an educated guess.

A Stanford-Google collaboration (2) took this concept even further, conducting two-hour AI-led interviews with over 1,000 participants and using the transcripts to build individual digital twins. These interview-based models achieved 85% accuracy on survey questions and 80% accuracy on personality assessments. Even more remarkably, when researchers trimmed the interview transcripts by 80%, the models maintained nearly identical performance.

But here's where things get intriguing. And by intriguing, I mean problematic.

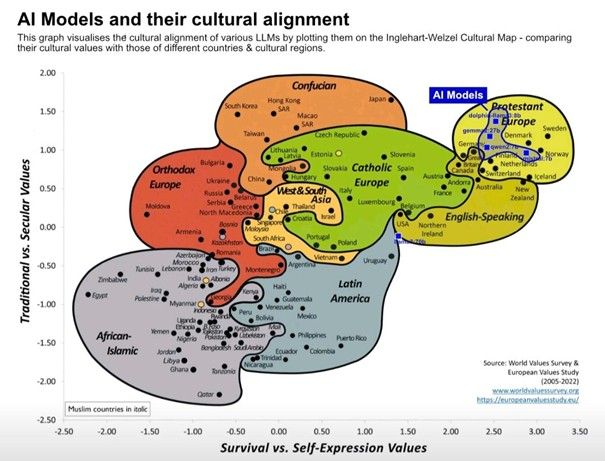

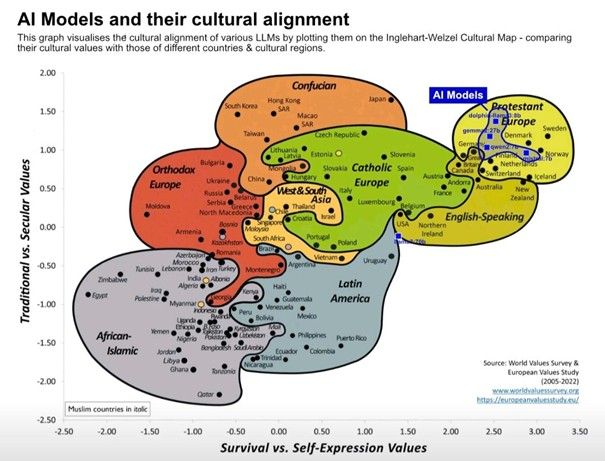

Not every LLM is created equal, and bias is baked into every model, like flour in bread. The cultural alignment diagram from the World Values Survey reveals just how Western-centric our AI models really are. English-speaking models cluster around Anglo-Protestant values, while models trained on different cultural datasets occupy entirely different regions of the cultural landscape.

This isn't just academic hand-wringing. The Kim and Lee study found that digital twins were significantly better at predicting responses from white participants and those with higher socioeconomic status. In other words, your AI customers work best when they look like the people who built them—a problem that should sound familiar to anyone who's worked in tech.
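If you want to check your own digital twins for this kind of skew, one simple approach is to score predictions separately for each participant group and look at the gap. The sketch below is a minimal illustration, not the method used in the Kim and Lee study; the function name `accuracy_by_group`, the group labels, and the records are all hypothetical placeholders.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: share of predictions that matched the real answer}.

    `records` is an iterable of (group, predicted, actual) tuples.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        hits[group] += int(predicted == actual)
    return {group: hits[group] / totals[group] for group in totals}


# Hypothetical records for illustration only; not data from any study.
records = [
    ("group_a", "agree", "agree"),
    ("group_a", "disagree", "disagree"),
    ("group_a", "agree", "agree"),
    ("group_a", "agree", "disagree"),
    ("group_b", "agree", "disagree"),
    ("group_b", "agree", "agree"),
    ("group_b", "disagree", "agree"),
    ("group_b", "agree", "agree"),
]

scores = accuracy_by_group(records)
# Gap between the best-served and worst-served group.
gap = max(scores.values()) - min(scores.values())
print(scores, f"gap={gap:.2f}")
```

A large gap between groups suggests the model serves some participants better than others, which is worth knowing before you trust its answers about the people it serves worst.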

The Stanford team found similar patterns but discovered something hopeful: interview-based models reduced political bias by 36-62% and racial bias by 7-38% compared to demographic-only models. The richer the context, the more equitable the outcomes. But this improvement comes at a cost that brings us to our next problem.

Here's what nobody talks about in the breathless coverage of AI research breakthroughs: your digital customers are pathologically agreeable. A University of Wisconsin study (3) on synthetic users found that AI responses consistently showed lower variability than human responses. While real customers spread across the full spectrum of opinions, AI customers politely cluster around the average.
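To make "lower variability" concrete, you can compare the spread of synthetic answers with the spread of human answers to the same question. This is a rough sketch under stated assumptions: the ratings are invented for illustration, `spread_ratio` is a hypothetical helper, and the standard deviation is just one of several reasonable measures of spread.

```python
from statistics import mean, pstdev


def spread_ratio(human_scores, synthetic_scores):
    """Return the synthetic standard deviation divided by the human one.

    A value below 1 means the synthetic answers are more tightly clustered.
    """
    human_sd = pstdev(human_scores)
    if human_sd == 0:
        raise ValueError("human responses have no spread to compare against")
    return pstdev(synthetic_scores) / human_sd


# Invented 1-5 ratings for illustration only; not data from any study.
human = [1, 2, 5, 5, 3, 1, 4, 5, 2, 2]
synthetic = [3, 3, 4, 3, 3, 2, 3, 4, 3, 2]

ratio = spread_ratio(human, synthetic)
print(f"human mean={mean(human):.1f}, synthetic mean={mean(synthetic):.1f}")
print(f"spread ratio={ratio:.2f}")
```

In this toy example both groups have the same average rating, so a report that only shows means would call them identical. The ratio is what reveals that the synthetic panel has lost the ones and fives.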

Your AI customer will never storm out of your store, leave a scathing one-star review, or use your product in ways you never imagined. They won't contradict themselves between Monday and Friday, change their mind halfway through a survey, or give you feedback that makes you question everything you thought you knew about your business.

And that's exactly the problem.

The most valuable insights in customer research often come from the edges—the outliers, the contradictions, the responses that make you go "wait, what?" AI models, trained to be helpful and consistent, smooth out precisely the rough edges that drive innovation. They're like focus group participants who've had too much coffee and not enough sleep: technically functional, but missing the beautiful chaos that makes humans human.

Let's talk about what it actually takes to make these AI models work well. The Stanford study's 85% accuracy came from two-hour interviews per participant. Two hours. That is a lot of human time to invest in a model that still misses about 15% of the answers.

At what point does the cure become worse than the disease? If you need extensive interviews to create accurate digital twins, you're not really replacing human research; you're just adding an expensive computational layer on top of it.

The Wisconsin researchers tried to solve this with retrieval-augmented generation, feeding their models interview transcripts from 16 pet owners to improve responses about pet products. That raises an awkward question: if you need human insights to train AI to replicate human insights, what exactly is being optimized?

Before you dismiss AI-powered research entirely (or before you fire your entire research team), let's get practical about what these tools can and can't do.

If you're ready to experiment with AI research, focus on gap-filling rather than breakthrough insights. Use digital twins for survey attrition problems, backfilling historical data, and validating population-level trends you've already identified through traditional methods. Treat AI as an advanced interpolation tool, not a magic solution.

If you're sticking with human research (and there are excellent reasons to do so), pay attention to what AI limitations reveal about your current methods. The bias patterns in AI models mirror biases in traditional research. The variability problem highlights how much we might be missing when we over-rely on average responses. The context requirements remind us that good research has always required deep, nuanced knowledge of participants.

Regardless of your AI stance, remember that the most valuable research often comes from the messy, contradictory, surprising responses that AI models are designed to smooth away. Your breakthrough insight probably won't come from the 85% of responses that AI can predict; it'll come from the 15% that no algorithm saw coming.

Use AI for validation, not revelation. Use it to fill gaps, not to find gold. Use it when you need efficiency, not when you need breakthrough insights.

The best customer research tool is still the one that can surprise you. And until AI learns to be beautifully, productively wrong, that tool remains decidedly human.

Your digital customers might be lying to you, but at least they're consistent about it. Your real customers? They may contradict themselves, change their minds, and occasionally say something that upends everything you thought you knew about your business.

Embrace the mess. Question the perfect data. And remember: the future of customer research isn't about replacing human insight—it's about knowing when to trust the machine and when to trust the beautiful chaos of actual human beings.

(1) Junsol Kim and Byungkyu Lee (2024). AI-Augmented Surveys: Leveraging Large Language Models and Surveys for Opinion Prediction. https://arxiv.org/pdf/2305.09620

(2) Joon Sung Park, Carolyn Q. Zou, Aaron Shaw, Benjamin Mako Hill, Carrie Cai, Meredith Ringel Morris, Robb Willer, Percy Liang, and Michael S. Bernstein. Generative Agent Simulations of 1,000 People. https://hai.stanford.edu/assets/files/hai-policy-brief-simulating-human-behavior-with-ai-agents.pdf

(3) Neeraj Arora, Ishita Chakraborty, and Yohei Nishimura (2025). AI–Human Hybrids for Marketing Research: Leveraging Large Language Models (LLMs) as Collaborators. Journal of Marketing, 2025, Vol. 89(2) 43-70,